Neuroscience, anthropology and philosophy now converge in regarding the activity of the hand and the sense of touch as essential to the development of higher cognitive faculties such as memory, imagination and language.

The hand, more than the brain, has shaped language and culture.

That the hand may determine and anticipate cognition is not “the innocuous and obvious claim that we need a body to reason; rather, it is the striking claim that the very structure of reason itself comes from the details of our embodiment. The same neural and cognitive mechanisms that allow us to perceive and move around also create our conceptual systems and modes of reason” (G. Lakoff, M. Johnson, Philosophy in the Flesh: The Embodied Mind and Its Challenge to Western Thought, Basic Books, 1999).

Thus, to understand intelligence completely, we must first understand the details of the hand’s sensorimotor system. In robotics, it is tempting to entertain a similar hypothesis: that the design of mechanical hands, including the principles of low-level sensing and control, will shape, at least in part, the development of artificial intelligence at large. Central to our research is the concept of constraints imposed by the embodied characteristics of the hand and its sensorimotor apparatus on the learning and control strategies we use for such fundamental cognitive functions as exploring, grasping and manipulating.

From our viewpoint, such constraints are not merely bounds that limit performance. Rather, they are the dominant factors that shaped, and effectively determined, how cognition developed in the unique, admirable form we observe on Earth. Hence they are better regarded as “enabling constraints”, called synergies, which organize the embodied hand.

Motor and sensory synergies are also our two key ideas for advancing the state of the art in artificial system architectures for the “hand” as a cognitive organ: we learn from human data and hypothesis-driven simulations how to better control, and even design, robot hands and haptic interfaces.

Haptic Perception and Virtual Reality

The sense of touch is an astonishingly complex and heterogeneous source of information. This information is essential for humans, and animals in general, to interact properly with others and with the external world, and hence to survive. As Aristotle affirmed in De Anima: “…Without touch it is impossible for animals to exist...the loss of this one sense alone must bring death…”. It is therefore not surprising that considerable research effort has gone into studying this richness and replicating it in artificial systems, with the goal of enabling effective human-machine communication. Such systems are called haptic interfaces (haptics from the Greek word haptesthai, related to touch): mechatronic devices equipped with sensors, actuators and control mechanisms, which sense touch-related information arising from interaction with the environment (real or virtual) and convey it to the user through haptic stimuli.

The activities of the Haptics research group can be grouped into four main areas:

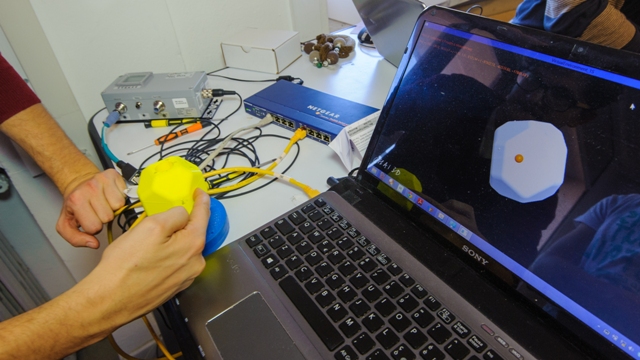

- Modelling of the sense of touch and human behavior: drawing on the mutual inspiration between neuroscience, mathematics and robotics, the objective is to devise mathematical models and abstractions that represent haptic interaction in humans, based on psychophysical experiments and on kinematic and kinetic recordings of human motor and manipulation activities. These abstractions are then used to inform the development of simple and effective artificial systems.

- Wearable and grounded haptic interfaces: the goal is to render haptic interaction and effectively convey tactile stimuli to users through mechatronic devices, i.e. haptic interfaces, in many applications including tele-robotics, virtual reality, prosthetics, assistive and rehabilitation robotics, and robot-assisted surgery. A special class of devices is represented by affective haptic systems, i.e. devices specifically designed to elicit emotion-related responses in users. Indeed, in addition to its discriminative role in shaping our knowledge of the external world, touch also acts as an emotion amplifier in social communication.

Wearable Fabric Yielding Display - Best Paper Award at Haptics Symposium 2016

- Human and robotic sensing: the core elements of this research area are sensors that measure forces, torques and accelerations in robots and human users, and wearable gloves that reconstruct human hand posture. Such information can then be conveyed back to the user through haptic interfaces. One of the leading ideas, together with neuroscience-driven inspiration, is under-sensing, i.e. the use of a limited number of sensing elements. Applications are in autonomous robots (reactive grasp), prosthetics, human investigation and virtual reality.
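As an illustration of the under-sensing idea in the last item, the sketch below estimates a full hand posture from only a few measured joints. It is a generic minimum-variance (Gaussian conditional) estimate, not necessarily the group's own method. The posture data are synthetic, and the names `estimate_posture`, `synergies` and `measured`, the 15-joint hand, and all numeric values are assumptions made for the example.

```python
import numpy as np

def estimate_posture(y, H, mean, cov, noise_var=1e-4):
    """Minimum-variance estimate of all joint angles q from a few
    measurements y = H @ q + noise, given a prior mean and covariance
    of q (here learned from recorded postures)."""
    H = np.asarray(H, dtype=float)
    innovation_cov = H @ cov @ H.T + noise_var * np.eye(H.shape[0])
    correction = cov @ H.T @ np.linalg.solve(innovation_cov, y - H @ mean)
    return mean + correction

# Synthetic "recorded" postures (hypothetical): 15 joints that mostly move
# along two coordination patterns, plus a little independent noise.
rng = np.random.default_rng(0)
n_joints = 15
synergies = np.column_stack([np.ones(n_joints),
                             np.linspace(-1.0, 1.0, n_joints)])
coeffs = rng.normal(size=(500, 2))
postures = coeffs @ synergies.T + 0.01 * rng.normal(size=(500, n_joints))

mean = postures.mean(axis=0)
cov = np.cov(postures, rowvar=False)

# An under-sensed glove (hypothetical): only joints 0, 7 and 14 are measured.
measured = [0, 7, 14]
H = np.eye(n_joints)[measured]

true_q = synergies @ np.array([0.8, -0.3])
q_hat = estimate_posture(H @ true_q, H, mean, cov)
print("largest joint error [rad]:", np.max(np.abs(q_hat - true_q)))
```

Because the synthetic postures are dominated by two coordination patterns, three well-placed sensors are enough to recover the remaining joints closely. With real recordings, the quality of the estimate would depend on how strongly the joints co-vary and on which joints are sensed.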

Development of Robotic Hands

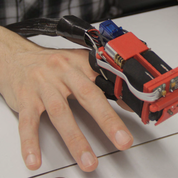

Pisa/IIT SoftHand: one of the motivations behind the development of humanoid robots is the will to comply with, and fruitfully integrate into, the human environment: a world forged by humans for humans, in which the shape of the hand is of prime importance.

The Pisa/IIT SoftHand implements the concept of adaptive synergies for actuation with a high degree of integration, in a humanoid shape.

The Pisa/IIT SoftHand is simple, robust and effective in grasping.

Simple because only one motor actuates the whole hand;

Robust because suitable joint design allows for disarticulation;

Effective thanks to the soft-synergy idea applied to 19 degrees of freedom.

The idea at the core of the Pisa/IIT SoftHand, i.e. soft synergies, comes from the combination of natural motor control principles. As a result, the hand pose is not predetermined but depends on the physical interaction of its body with the environment, which allows the hand to grasp a great variety of objects despite its single degree of actuation.
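The way a single actuator can produce a pose that adapts to contact can be sketched with a toy quasi-static model. This is not the actual SoftHand model. It assumes one tendon routed over pulleys on spring-loaded joints, and computes the pose that minimizes the elastic energy for a given tendon displacement. The function name `adaptive_pose` and all radii, stiffnesses and displacements are hypothetical.

```python
import numpy as np

def adaptive_pose(sigma, radii, stiffness, blocked=None):
    """Joint angles of n spring-loaded joints pulled by one tendon.

    Minimizes the elastic energy 0.5 * sum(k_i * q_i**2) subject to the
    tendon constraint sum(r_i * q_i) == sigma. Joints listed in `blocked`
    (index -> angle) are held at that angle, e.g. by contact with an object.
    Assumes at least one joint remains free.
    """
    radii = np.asarray(radii, dtype=float)
    stiffness = np.asarray(stiffness, dtype=float)
    q = np.zeros_like(radii)
    free = np.ones(len(radii), dtype=bool)
    for index, angle in (blocked or {}).items():
        q[index] = angle
        free[index] = False
    # Tendon travel left over for the joints that can still move.
    remaining = sigma - radii[~free] @ q[~free]
    compliance = radii[free] / stiffness[free]
    q[free] = compliance * remaining / (radii[free] @ compliance)
    return q

# Hypothetical finger: three joints, 10 mm pulleys, equal spring stiffness.
radii = [0.01, 0.01, 0.01]      # pulley radii [m]
stiffness = [1.0, 1.0, 1.0]     # joint spring stiffness [N m / rad]
sigma = 0.009                   # tendon displacement [m]

free_motion = adaptive_pose(sigma, radii, stiffness)
# The first phalanx touches an object early and stops at 0.1 rad.
in_contact = adaptive_pose(sigma, radii, stiffness, blocked={0: 0.1})
print("free motion:", free_motion)
print("in contact: ", in_contact)
```

In free motion the joints close together in a fixed ratio. When one joint is stopped by an object, the same tendon displacement is redistributed to the joints that are still free, so the fingers keep wrapping around the object. This is the qualitative behavior the paragraph above describes.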

Simplicity, robustness, lightness and effectiveness make the Pisa/IIT SoftHand ideal for both humanoid robotics and prosthetics.

The recent improvement, the Pisa/IIT SoftHand PLUS, is actuated by two motors so that it can perform not only grasping but also complex manipulation of objects.

Winner of the Best Paper Award at Humanoids 2012: "Adaptive Synergies for a Humanoid Hand", M. G. Catalano, G. Grioli, A. Serio, E. Farnioli, C. Piazza, A. Bicchi.

Best Poster Award @EuroHaptics 2014: "A change in the fingertip contact area induces an illusory displacement of the finger", Alessandro Moscatelli, Matteo Bianchi, Alessandro Serio, Omar Al Atassi, Simone Fani, Alexander Terekhov, Vincent Hayward, Marc Ernst and Antonio Bicchi.

Best Interactive Paper Award at Humanoids 2015: "Dexterity augmentation on a synergistic hand: the Pisa/IIT SoftHand+", C. Della Santina, G. Grioli, M. Catalano, A. Brando, A. Bicchi.

UNIPI team Finalist at the Amazon Picking Challenge

Projects: The Hand Embodied, SoftHands, WearHap, SOMA, SoftPro

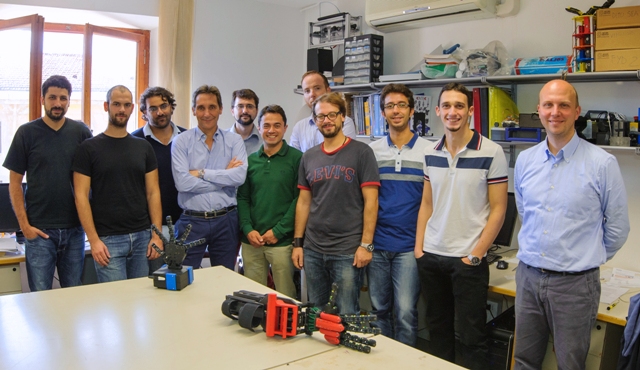

The first prototypes

Centro "E. Piaggio" has developed prototypes of robotic hands for studying synergies from both the software and the hardware points of view. The first prototype, called THE Zero Hand, is a three-finger robotic hand with a hardware implementation of synergies. The second prototype, called THE First Hand, is a five-finger robotic hand with 16 degrees of freedom (DoFs). THE First Hand is fully actuated and was built to study synergies from the software point of view.

THE Zero Hand is a three-finger robotic hand in which synergies are implemented by a pulley mechanism. UNIPI chose to design a hand with fewer degrees of actuation (DoAs) than DoFs, exploiting mechanical synergies built expressly into the hand. Another design aim was modularity: each finger is a chain of identical joints, all with the same structure and layout, which can in principle be assembled in sequences of many modules, departing in a sense from the usual design of robotic fingers. A finger can thus be extended and rearranged as a modular structure built from one basic component. Every joint has a recoil spring, so that a single tendon suffices to move it. This keeps the number of tendons low and simplifies the tendon routing.
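One consequence of building a finger as a chain of identical modules is that its kinematics can be written once for any number of modules. The sketch below assumes a planar finger whose modules all have the same length. The function name `fingertip_position`, the 30 mm module length and the joint angles are hypothetical values chosen for illustration, not dimensions of THE Zero Hand.

```python
import math

def fingertip_position(joint_angles, module_length=0.03):
    """Planar forward kinematics of a finger made of identical modules.

    Each entry of `joint_angles` is the flexion [rad] of one module relative
    to the previous one. Adding a module is just adding an angle.
    """
    x = y = 0.0
    heading = 0.0
    for angle in joint_angles:
        heading += angle                       # orientation accumulates
        x += module_length * math.cos(heading)
        y += module_length * math.sin(heading)
    return x, y

# The same function handles a three-module and a five-module finger.
print(fingertip_position([0.2, 0.2, 0.2]))
print(fingertip_position([0.2, 0.2, 0.2, 0.2, 0.2]))
```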

In order to apply synergies in grasping experiments, UNIPI has developed a low-cost robotic hand prototype called THE First Hand, motivated by the study of synergies from both the software and the control points of view. The hand was designed to reproduce the kinematic structure of a human hand with the same overall dimensions. It has five tendon-driven fingers, for a total of 16 degrees of freedom.

THE First Hand: schematic of the hand's salient features (right) and the real prototype (left)

THE First Hand has been developed to study synergies from the software point of view: it is intended as a platform for mapping synergies and for studying robotic grasping. For these purposes it was built following a simple, low-cost design paradigm, so as to obtain a robust prototype that is quick and simple to use. Another design target concerned the size and movement capability of the prototype. UNIPI chose to build a robotic hand very similar to a human hand in both dimensions and movements, in a sort of bio-replication design paradigm.
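On a fully actuated hand, synergies can be implemented in software by driving all joints from a few synergy coordinates. The sketch below shows one common way to do this, using principal component analysis of recorded postures. It is an illustrative assumption rather than the mapping actually used on THE First Hand. The posture data are synthetic, and the function names, joint limits and synergy values are hypothetical.

```python
import numpy as np

def fit_synergies(postures, n_synergies):
    """PCA of recorded postures: returns the mean posture and a matrix whose
    columns are the leading principal directions (the synergies)."""
    mean = postures.mean(axis=0)
    _, _, vt = np.linalg.svd(postures - mean, full_matrices=False)
    return mean, vt[:n_synergies].T

def synergy_to_joints(z, mean, basis, lower, upper):
    """Map synergy coordinates z to joint commands, clipped to joint limits."""
    return np.clip(mean + basis @ np.asarray(z, dtype=float), lower, upper)

# Synthetic posture recordings for a 16-DoF hand (hypothetical data).
rng = np.random.default_rng(1)
n_joints = 16
postures = 0.6 + 0.3 * rng.normal(size=(200, 3)) @ rng.normal(size=(3, n_joints))

mean, basis = fit_synergies(postures, n_synergies=2)

lower = np.zeros(n_joints)               # hypothetical joint limits [rad]
upper = np.full(n_joints, np.pi / 2)

# Two numbers now command all 16 joints.
command = synergy_to_joints([0.5, -0.2], mean, basis, lower, upper)
print(command.round(3))
```

The design choice here is that the controller works in the low-dimensional synergy space, while the clipping step keeps every commanded joint inside its mechanical range.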

THE First Hand grasp trials: scissors (left), syringe (middle) and stapler (right)